A Mother-and-Daughter Team Have Developed What May Be the World’s First Alzheimer’s Vaccine

Brain inflammation from Alzheimer's disease.

Alzheimer's is a terrible disease that robs a person of their personality and memory before eventually leading to death. It's the sixth-largest killer in the U.S. and, currently, there are 5.8 million Americans living with the disease.

The disease devastates people and families, and Alzheimer's and other forms of dementia are estimated to cost the U.S. $290 billion this year alone. By 2050, it is projected to become a trillion-dollar-a-year disease.

There have been over 200 unsuccessful attempts to find a cure for the disease and the clinical trial termination rate is 98 percent.

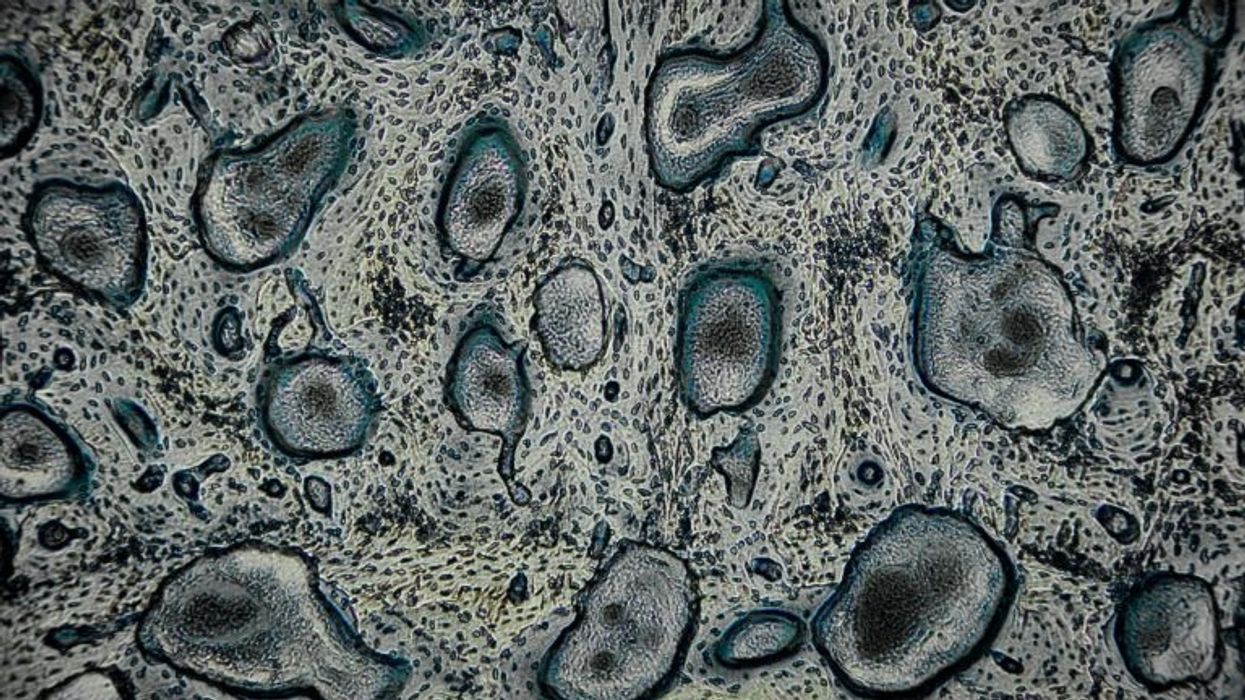

Alzheimer's is caused by plaque deposits that develop in brain tissue and become toxic to brain cells. One of the major hurdles to finding a cure is that it's impossible to clear the deposits from the tissue, so scientists have turned their attention to early detection and prevention.

One very encouraging development has come out of the work of Dr. Chang Yi Wang. Wang is a prolific bio-inventor; one of her biggest successes is a foot-and-mouth vaccine for pigs that has been administered more than three billion times.

Mei Mei Hu

Brainstorm Health / Flickr.

In January, United Neuroscience, a biotech company founded by Wang, her daughter Mei Mei Hu, and her son-in-law Louis Reese, announced the first results from a phase IIa clinical trial of UB-311, an Alzheimer's vaccine.

The vaccine contains synthetic versions of amino acid chains that trigger antibodies to attack the Alzheimer's protein in the blood. Wang's vaccine is a significant improvement over previous attempts because it can attack the Alzheimer's protein without creating any adverse side effects.

"We were able to generate some antibodies in all patients, which is unusual for vaccines," Wang told Wired. "We're talking about almost a 100 percent response rate. So far, we have seen an improvement in three out of three measurements of cognitive performance for patients with mild Alzheimer's disease."

The researchers also claim it can delay the onset of the disease by five years. While this would be a godsend for people with the disease and their families, according to Elle, it could also save Medicare and Medicaid more than $220 billion.

"You'd want to see larger numbers, but this looks like a beneficial treatment," James Brown, director of the Aston University Research Centre for Healthy Ageing, told Wired. "This looks like a silver bullet that can arrest or improve symptoms and, if it passes the next phase, it could be the best chance we've got."

"A word of caution is that it's a small study," Drew Holzapfel, acting president of the nonprofit UsAgainstAlzheimer's, said, according to Elle. "But the initial data is compelling."

The company is now working on its next clinical trial of the vaccine, and while hopes are high, so is the pressure. The company has already invested $100 million in developing its vaccine platform. According to Reese, the company's ultimate goal is to create a host of vaccines that will be administered to protect people from chronic illness.

"We have a 50-year vision -- to immuno-sculpt people against chronic illness and chronic aging with vaccines as prolific as vaccines for infectious diseases," he told Elle.

[Editor's Note: This article was originally published by Upworthy here and has been republished with permission.]